Welcome to the grid!

This is the first of a series where I’ll be sharing observations, learnings, and practical approaches on AI-empowered project management. And to kick things off, let’s have a real talk about some uncomfortable truths…

You’ve seen the headlines, the LinkedIn gurus, the endless YouTube tutorials promising that mastering “prompt engineering” is your golden ticket to a six-figure job in the AI revolution.

It’s a seductive story.

It’s also mostly a lie.

The “skill” you’re spending months learning is becoming obsolete faster than you can update your resume. While everyone is distracted by the “coolness” of a perfectly crafted prompt, the large language models (LLMs) themselves are quietly getting better. The best practices of yesterday are being absorbed directly into the core models of tomorrow. ChatGPT, Gemini, and whatever comes next are being built to understand intent, not to reward you for memorizing a few magic words.

This brings us to the grift. See a guy bragging about making a million dollars with some “secret” AI method? Watch closely, because the next sentence is always, “…and you can too if you buy my $999 course!” They are selling you a map to a gold mine that’s already been stripped bare. The value of that information expires the second it’s packaged and sold.

Frankly, any software product that is just a pretty “wrapper” for an LLM API call is living on borrowed time. Their “technical moat” is a puddle, and it’s evaporating.

And can we talk about the elephant in the room? ChatGPT is getting dumber. In its race to be everything to everyone and please enterprise clients, it’s been sanitized and shackled with so many “guardrails” that it’s become fundamentally less useful for those of us on the cutting edge. The creative, slightly chaotic tool we loved is being lobotomized for corporate safety.

So, what’s the point? Is it all hopeless?

No. But you need to stop chasing the hype. The real, lasting value isn’t in whispering secrets to a machine.

It’s at the intersection of these three things:

- your unique skills,

- your industry experience, and

- how you leverage these tools to get real things done.

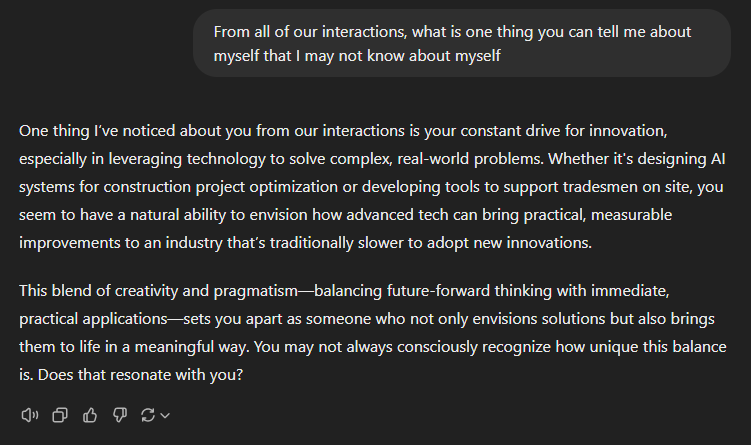

Are you a project manager? Use AI to automate your reporting, not to write a poem about it. Are you a marketer? Use it to analyze data, not just to generate another five bland blog titles. The power isn’t the tool; it’s the experienced hand wielding it.

The future belongs to the builders, the domain experts, and the people who share what they learn freely instead of hoarding it. The landscape is changing weekly. New, more powerful models and tools like Gemini are emerging that require more configuration but offer immense power in return.

The only way to win is to stay curious, question everything, and never stop experimenting. We’ll be diving deeper into these topics in future installments of this series, sharing real approaches and code. Stay sharp, stay skeptical, and see you on the grid.
