As software and standards mature and even become archaic, they inevitably attract baggage along the way. Years of technical debt amassed by well-intentioned developers and product managers will be paid for by us and our children.

This is particularly evident when we start talking about the Id of Revit elements, and the IFC GUID syntax. As most of you are aware, Revit carries a number of different identifying attributes for each and every element. Here is a basic list:

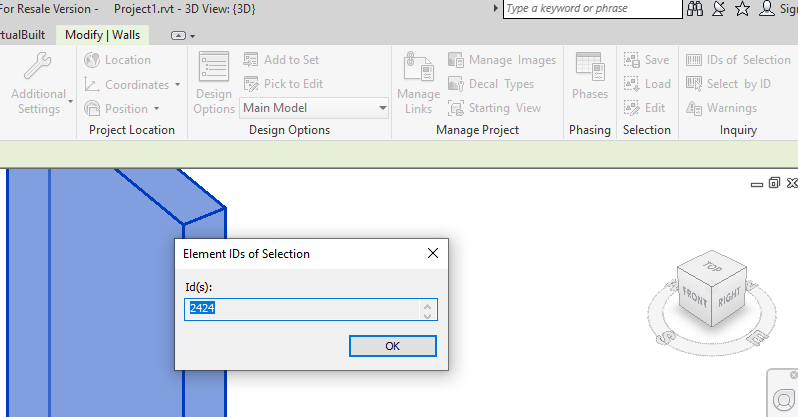

- Element Id – the numerical form of a Revit Id. You can interact with it directly using the Select by ID command in Revit.

- UniqueId – “A stable unique identifier for an element within the document.” This is not a correctly formed UUID, but rather a concatenation of a standard UUID for the Revit EpisodeId (session based) and the Revit element Id written as 8 hexadecimal characters. From Jeremy’s post: it is similar to the standard GUID format, but has 8 additional characters at the end. These 8 additional hexadecimal characters are large enough to store 4 bytes, or a 32-bit number, which is exactly the size of a Revit element Id.

- DwfGuid (Export Guid) – this is a correctly formed UUID in .NET / standard format

- IfcGuid – identical to DwfGuid, but encoded differently according to IFC rules created in the mid-90s. At the time, it was deemed worthwhile to ‘compress’ the IFC Guid to the shorter form we still have to deal with today.
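To make the UniqueId structure concrete, here is a minimal sketch in plain Python (standard library only, runnable outside Revit). It assumes the 45-character layout described above: a 36-character UUID, a hyphen, then 8 hexadecimal characters for the element Id. The function name split_unique_id is made up for illustration and is not part of the Revit API; the sample value is the UniqueId from the test output later in this post.

```python
import uuid


def split_unique_id(unique_id):
    """Split a Revit UniqueId into (episode UUID, element id as int).

    Hypothetical helper: assumes a 36-character UUID, a hyphen,
    then 8 hexadecimal characters holding the element id.
    """
    if len(unique_id) != 45 or unique_id[36] != "-":
        raise ValueError("unexpected UniqueId layout: %r" % unique_id)
    episode = uuid.UUID(unique_id[:36])   # the standard UUID part
    element_id = int(unique_id[37:], 16)  # the trailing 8 hex characters
    return episode, element_id


episode, element_id = split_unique_id(
    "c98618bf-7112-4e90-8a71-8ab768f2b1c0-00000978"
)
print(episode)     # c98618bf-7112-4e90-8a71-8ab768f2b1c0
print(element_id)  # 2424
```

The trailing 00000978 is simply 2424 in hexadecimal, which is the Element Id you would type into Select by ID.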

It would be nice if Revit and IFC shared a single common unique identifying syntax, but unfortunately this is not the case.

The above Ids do actually bear some predictable relationship to each other. Revit forms the UniqueId by joining the EpisodeId and the hexadecimal-encoded Element Id. The Dwf or Export Guid is created by an algorithm that has a number of conditional rules. The Dwf Guid can in turn be converted to the backwards-compatible IfcGuid format through a different algorithm, originally written in C. Those algorithms can be sourced in various places (see the links in Further Reading below).
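As a rough sketch of both conversions in plain Python (standard library only, outside Revit): the first function covers only the simplest case of the Export Guid algorithm, where the last 8 hexadecimal digits of the episode UUID are XORed with the element Id, and it ignores the conditional rules mentioned above. The second treats the GUID as a 128-bit number and writes it as 22 base-64 digits using the IFC character set. Both function names are hypothetical, and neither is a substitute for the reference implementations listed under Further Reading. The sample value is the UniqueId from the test output later in this post.

```python
import uuid

# The 64-character alphabet used by the compressed IFC GUID form
IFC_CHARS = "0123456789ABCDEFGHIJKLMNOPQRSTUVWXYZabcdefghijklmnopqrstuvwxyz_$"


def export_guid_from_unique_id(unique_id):
    """Simplest case only: XOR the low 32 bits of the episode UUID
    with the element id (hypothetical helper, no conditional rules)."""
    episode = uuid.UUID(unique_id[:36])
    element_id = int(unique_id[37:], 16)
    return uuid.UUID(int=episode.int ^ element_id)


def ifc_guid_from_guid(guid):
    """Write a 128-bit GUID as 22 base-64 digits, most significant first."""
    number = guid.int
    digits = []
    for _ in range(22):
        number, remainder = divmod(number, 64)
        digits.append(IFC_CHARS[remainder])
    return "".join(reversed(digits))


unique_id = "c98618bf-7112-4e90-8a71-8ab768f2b1c0-00000978"
export_guid = export_guid_from_unique_id(unique_id)
print(export_guid)                      # c98618bf-7112-4e90-8a71-8ab768f2b8b8
print(ifc_guid_from_guid(export_guid))  # 39XXY$SH9Ea8fnYhTeyhYu
```

For this element, both values agree with what the Revit API returns in the final test further down. Elements that hit one of the conditional rules may not follow the simple XOR case.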

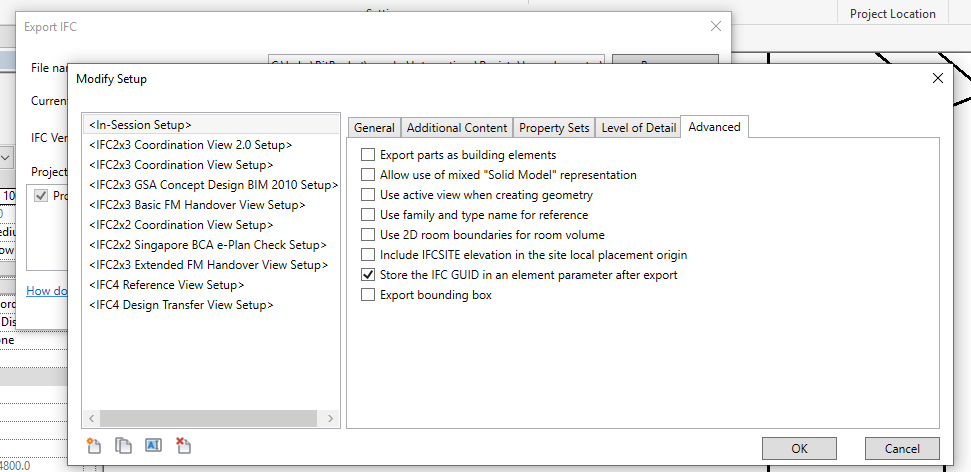

Also, some of these get exposed in different ways – if you create Element-bound BCF files, they will typically show the IFC Guid syntax. Further, if you export an IFC file from Revit and tick the option on the Exporter, it will write the IfcGuid to a parameter on the element.
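When a BCF file or an exported parameter only gives you the compressed IFC form, it can help to expand it back into a standard GUID so that it can be compared with the Export Guid. Below is a minimal sketch in plain Python (standard library only), assuming the usual 22-character IFC encoding over the 64-character set 0-9, A-Z, a-z, _ and $. The function name is hypothetical and is not part of any Revit or IFC library.

```python
import uuid

# The 64-character alphabet used by the compressed IFC GUID form
IFC_CHARS = "0123456789ABCDEFGHIJKLMNOPQRSTUVWXYZabcdefghijklmnopqrstuvwxyz_$"


def guid_from_ifc_guid(ifc_guid):
    """Expand a 22-character IFC GUID into a standard UUID."""
    if len(ifc_guid) != 22:
        raise ValueError("an IFC GUID must be 22 characters long")
    number = 0
    for character in ifc_guid:
        # str.index raises ValueError for characters outside the alphabet
        number = number * 64 + IFC_CHARS.index(character)
    if number >= 1 << 128:
        raise ValueError("value does not fit in 128 bits")
    return uuid.UUID(int=number)


print(guid_from_ifc_guid("39XXY$SH9Ea8fnYhTeyhYu"))
# c98618bf-7112-4e90-8a71-8ab768f2b8b8
```

The sample string is the IfcGuid from the test output later in this post, and it expands to the same Dwf Guid shown there.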

You can query the Element Id directly in Revit (Manage ribbon, Inquiry panel, IDs of Selection).

However, for the purpose of this post, let’s assume you are using Dynamo with IronPython, and you want to query all 4 Ids from an element.

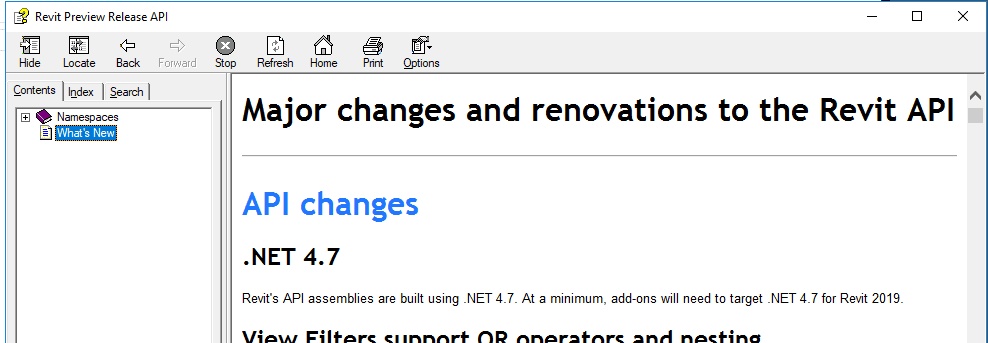

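We at least need to import the Revit API and the Revit IFC API. The loop further down also uses a doc variable, which is assumed here to be the current document obtained through the usual Dynamo RevitServices boilerplate:

    import clr

    clr.AddReference("RevitAPI")
    import Autodesk
    from Autodesk.Revit.DB import *

    clr.AddReference("RevitAPIIFC")
    from Autodesk.Revit.DB.IFC import *

    # Assumption: doc is the active Revit document
    clr.AddReference("RevitServices")
    from RevitServices.Persistence import DocumentManager
    doc = DocumentManager.Instance.CurrentDBDocument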

Following this, we can use the various Dynamo and Python commands to access the Ids:

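    # e is the list of Revit elements fed into the node; doc is the current Revit document
    elementIds, uniqueIds, DwfGuids, IfcGuids, successlist = [], [], [], [], []

    for i in e:
        try:
            elementIds.append(i.Id)
            uniqueIds.append(i.UniqueId)
            # Export (Dwf) Guid: returned as a .NET System.Guid
            DwfGuids.append(ExportUtils.GetExportId(doc, ElementId(i.Id)))
            # IFC Guid: sub-element index 0 is the main element itself
            IfcGuids.append(ExporterIFCUtils.CreateSubElementGUID(UnwrapElement(i), 0))
            successlist.append("Success")
        except Exception:
            successlist.append("Failure")

    OUT = elementIds, uniqueIds, DwfGuids, IfcGuids, successlist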

Notice the commands and properties used:

- Element Id – query the Element.Id member

- Unique Id – query the Element.UniqueId member

- Dwf or Export Guid – use the ExportUtils.GetExportId method

- IfcGuid – use the ExporterIFCUtils.CreateSubElementGUID method (index 0 refers to the main element itself)

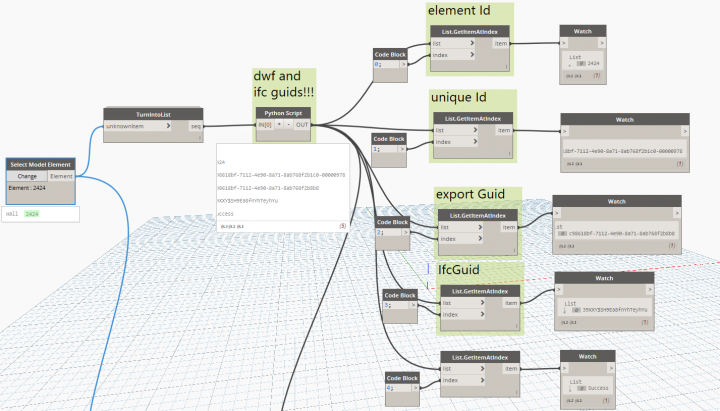

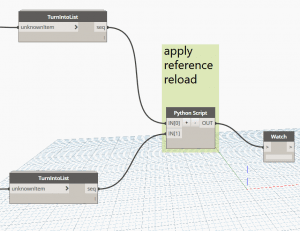

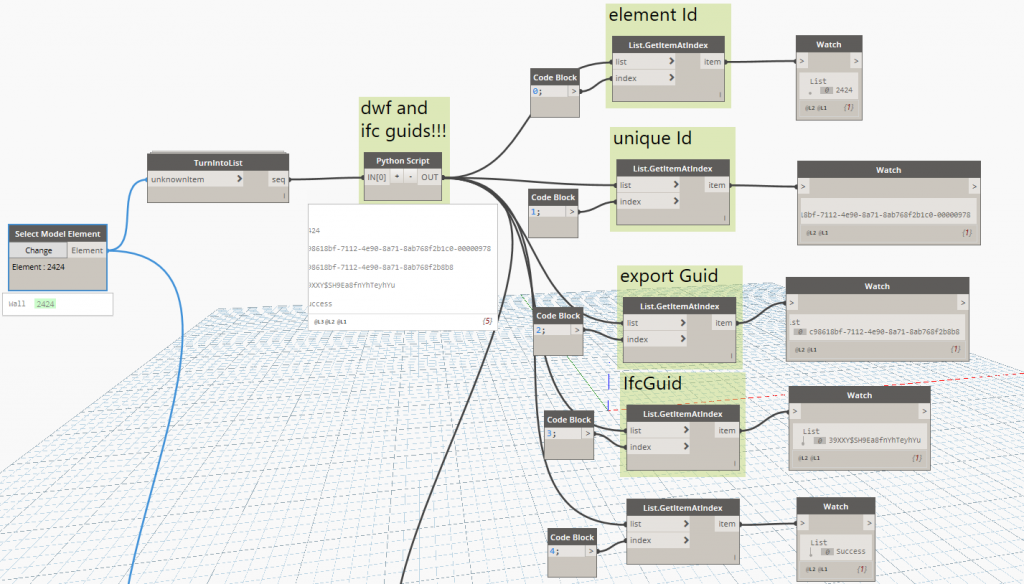

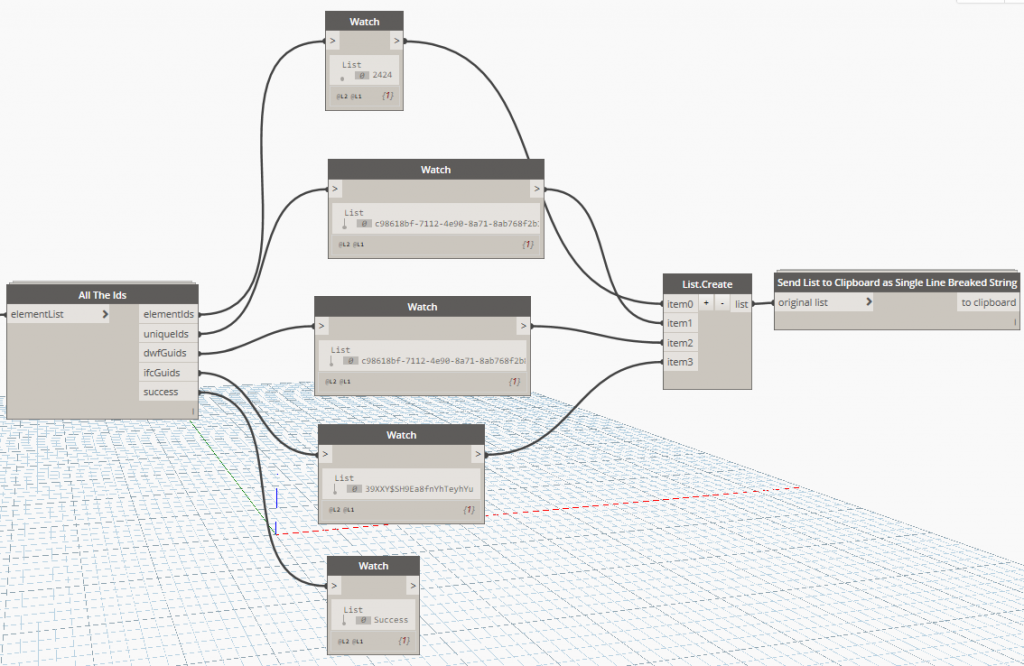

From here, I created a Dynamo node that eats Revit elements and gives us back these Id values.

This node will be published in the Bakery package on the package manager, and to GitHub.

Further, our VirtualBuiltApp platform has been developed to store and query multiple Ids for a single element.

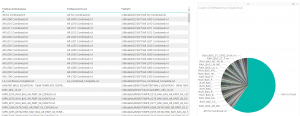

Example output from the Dynamo / Python node. This initial test showed that the Dwf Guid is still a .NET object, which should probably be converted to a string for consistency:

    [2424] ['c98618bf-7112-4e90-8a71-8ab768f2b1c0-00000978'] [<System.Guid object at 0x0000000000000071 [c98618bf-7112-4e90-8a71-8ab768f2b8b8]>] ['39XXY$SH9Ea8fnYhTeyhYu']

I added str() to the Python, wrapping the ExportUtils.GetExportId call so that the Dwf Guid comes back as a string.

Final test showing the 4 different Id values for a single object:

    2424 'c98618bf-7112-4e90-8a71-8ab768f2b1c0-00000978' 'c98618bf-7112-4e90-8a71-8ab768f2b8b8' '39XXY$SH9Ea8fnYhTeyhYu'

Further reading:

- Revit API notes on exporting the IFC GUID

- Detailed post from 2009 by The Building Coder on element Ids

- RFC 4122 specification for UUIDs

- Post from 2013 by Matteo Cominetti

- buildingSMART IFC GUID specification including code examples

- IFC GUID decompression library example

- Open Source IFC Parser code from Jon Mirtschin (Geometry Gym) – includes DecodeGlobalID and EncodeGuid