I previously posted about how to quickly repath links based on some control mechanisms. Enter BIM 360, and the wild world of Revit cloud worksharing… I expect that it will now be commonplace for existing projects and datasets to move across to BIM 360 ‘mid project’. But that creates some interesting problems, like creating folders, dealing with the initiation process, and replacing local Revit links with their cloud versions.

This post is focused on that process of changing all of the Revit link paths to link to the BIM 360 models. Unfortunately, the previous method I used (TransmissionData, like eTransmit) is not available for cloud hosted models. So how do we automate this process?

We went about it this way:

1. Initiate all Revit models on the BIM 360 Document Management cloud (manually, for now)

2. Create one federated model on the BIM 360 cloud that links in all the other cloud hosted Revit models. You might do this one manually, using Reload From in the Manage Links dialog box.

3. Once you have that one ‘super host model’, use a batch process to harvest all of the cloud model data

4. Using the harvested data, create a script that implements a Reload From method to batch reload local models from their cloud counterpart

On the journey to solving step 3, I experimented with a few different methods. I discovered that you need to use the ExternalResourceReference class to get information about BIM 360 cloud models (not ExternalFileReference).

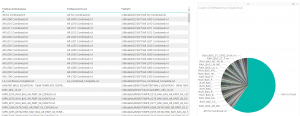

I also realised that I had to deal with Reference Information, which appears to be a .NET dictionary per link that stores some funky Forge IDs and so on. But I want to store all this data in our VirtualBuiltApp BIM Management system, so I had to serialise the Reference Information to a string that could be stored in a database VARCHAR field (or push to Excel if you are still doing things the old way). Dimitar Venkov gave me a few tips about using JSON with IronPython in Dynamo (thanks mate!), so after that all the harvesting pieces were in place!

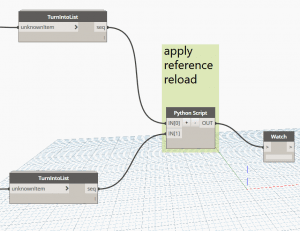

Here is some of the harvesting and JSON code. Notice that I played around with using a container class to pass data between Dynamo nodes. In the end, a JSON string was the answer:

    data = []
    for u in unwraps:
        data.append(u.GetExternalResourceReference(linkresource))

    class dummy(object):
        def ToString(self):
            return 'container'

    container = dummy()
    sdicts = []
    for y in data:
        dictinfo = ExternalResourceReference.GetReferenceInformation(y)
        container.dictinfo = dictinfo
        infos.append(container)
        shortnames.append(ExternalResourceReference.GetResourceShortDisplayName(y))
        versionstatus.append(ExternalResourceReference.GetResourceVersionStatus(y))
        insessionpaths.append(y.InSessionPath)
        serverids.append(y.ServerId)
        versions.append(y.Version)
        sdicts.append(json.dumps(dict(dictinfo)))
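If you want to try the JSON step on its own, outside Revit, here is a minimal sketch. It assumes the Reference Information behaves like a plain string-to-string dictionary; the keys and values in `sample_info` are made up for illustration and are not real Forge IDs, and the helper names `pack_reference_info` and `unpack_reference_info` are my own.

```python
import json


def pack_reference_info(info):
    """Serialise a string-to-string mapping to a JSON string for storage."""
    # sort_keys gives a stable string, which helps when comparing stored rows
    return json.dumps(dict(info), sort_keys=True)


def unpack_reference_info(text):
    """Turn the stored JSON string back into a plain dict of strings."""
    return {str(k): str(v) for k, v in json.loads(text).items()}


# Hypothetical stand-in for what GetReferenceInformation() returns
sample_info = {"ExampleProjectId": "1234-abcd", "ExampleModelId": "5678-efgh"}

packed = pack_reference_info(sample_info)   # safe to store in a VARCHAR field
restored = unpack_reference_info(packed)    # ready to rebuild a dictionary from
```

The round trip matters because the reloading side needs exactly the same keys and values back before it can build a new reference.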

The next step was to create the ‘batch reload from’ tool. Now that we had the necessary data, we just had to use it to grab the matching cloud path information (from our database) and apply it to each Revit link.

I created a node that essentially built a new reference path from the JSON and other data that we had harvested. Here is some of that code:

    des = []
    for x in referencesInfo:
        des.append(json.loads((x)))

    newdicts = []
    for y in des:
        newdicts.append(Dictionary[str, str](y))

    serverGuids = []
    for g in serverIdsIn:
        tempguid = Guid(g)
        serverGuids.append(tempguid)

    newrefs = []
    for z in range(len(referencesInfo)):
        serverIdIn = serverGuids[z]
        referenceInfo = newdicts[z]
        versionInfo = versionsInfo[z]
        sessionPathIn = sessionsPathIn[z]
        tempRef = ExternalResourceReference(serverIdIn, referenceInfo, versionInfo, sessionPathIn)
        newrefs.append(tempRef)

    OUT = newrefs

The final step was to get a RevitLinkType and a matching ReferenceInformation and apply them to each other. I stored the data in our cloud-based BIM Management Application, VirtualBuiltApp. Then I could easily just pull the data into Dynamo with a suitable database connector, and match up the RevitLinkType in the current file with its associated cloud identity. For that genuine 90s feel, you could use Excel to store the data, as it is just a JSON string and some other strings.
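The matching step can be sketched in plain Python. This is only an illustration under my own assumptions: the record layout (`link_name`, `server_id`, `version`, `in_session_path`, `reference_info`) is hypothetical, the values are placeholders, and matching on a case-insensitive file name is one possible rule rather than the one the actual graph uses.

```python
def index_by_name(records):
    """Build a lookup from lower-cased link file name to its stored record."""
    return {rec["link_name"].lower(): rec for rec in records}


def match_links(link_names, records):
    """Pair each link name in the current file with its stored cloud record.

    Returns (matched, missing). matched is a list of (name, record) tuples;
    missing lists the names with no stored record so they can be reported.
    """
    lookup = index_by_name(records)
    matched, missing = [], []
    for name in link_names:
        rec = lookup.get(name.lower())
        if rec is None:
            missing.append(name)
        else:
            matched.append((name, rec))
    return matched, missing


# Hypothetical harvested rows, as they might come back from a database or Excel
records = [
    {"link_name": "Architecture.rvt",
     "server_id": "00000000-0000-0000-0000-000000000001",
     "version": "1",
     "in_session_path": "example-path-architecture",
     "reference_info": '{"ExampleModelId": "aaaa"}'},
    {"link_name": "Structure.rvt",
     "server_id": "00000000-0000-0000-0000-000000000001",
     "version": "3",
     "in_session_path": "example-path-structure",
     "reference_info": '{"ExampleModelId": "bbbb"}'},
]

matched, missing = match_links(["architecture.rvt", "Civil.rvt"], records)
```

Keeping the unmatched names in a separate list makes it easy to spot links that were never initiated on the cloud before running the reload.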

Here is the key bit of code that actually changes the link path (without all of my other error checking bits and pieces):

    try:
        newCloudPath = newCloudPaths[l]
        reloaded = fileToChange.LoadFrom(newCloudPath, defaultconfig)
        successlist.append(reloaded.LoadResult)
        TransactionManager.Instance.ForceCloseTransaction()
    except:
        successlist.append("Failure, not top level link or workset closed")

To actually implement the script and get productive, I opened 4 instances of Revit, and then used this process in each instance:

- Open the Revit file from BIM 360, with Specify… all worksets closed

- Unload all links

- Open all worksets

- Run the Reloader Script

- Confirm link status in Manage Links

- Optional: Add ‘bim 360 links loaded’ text to Start View (just for tracking purposes)

- Optional: Add comment to VirtualBuiltApp (for tracking purposes)

- Close and Sync

In this way I can have 4 or more sessions operating concurrently, fixing all the link paths automatically, and I just need to gently monitor the process.

One nice thing is that I set the script up to immediately Unload a link after it had obtained and applied the new Path information. This means that the Revit instance does not get bogged down with many gigs of link data in memory, and in fact this is way faster than trying to use Manage Links for a similar process.
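The reload-then-unload loop might look roughly like the sketch below. It is not the actual script: `reload_links` and the stub classes are hypothetical names I made up so the loop can run outside Revit. Inside Revit, the link objects would be RevitLinkType elements, and I am assuming their `Unload` method accepts `None` for its callback argument. The transaction handling from the snippet above is left out here.

```python
def reload_links(link_types, new_refs, config, unload_after=True):
    """Reload each link from its new reference and record the outcome."""
    results = []
    for link, ref in zip(link_types, new_refs):
        try:
            loaded = link.LoadFrom(ref, config)
            results.append(str(loaded.LoadResult))
            if unload_after:
                # Free the link straight away so memory use stays low
                link.Unload(None)
        except Exception as ex:
            # One bad link should not stop the rest of the batch
            results.append("Failure: {0}".format(ex))
    return results


class StubResult(object):
    """Stand-in for the object LoadFrom returns (illustration only)."""
    LoadResult = "StubLoaded"


class StubLink(object):
    """Stand-in for a RevitLinkType so the loop can be tried without Revit."""

    def __init__(self, fail=False):
        self.fail = fail
        self.unloaded = False

    def LoadFrom(self, ref, config):
        if self.fail:
            raise RuntimeError("not a top level link")
        return StubResult()

    def Unload(self, callback):
        self.unloaded = True


good, bad = StubLink(), StubLink(fail=True)
demo_results = reload_links([good, bad], ["ref-a", "ref-b"], None)
```

Catching the failure per link keeps the results list the same length as the input, so it lines up with the link names when you review it afterwards.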

Ideally I would like to fully automate this, to the point where it opens each file, runs the script, and syncs. Unfortunately, time didn’t allow me to get all the code together for that (for now).

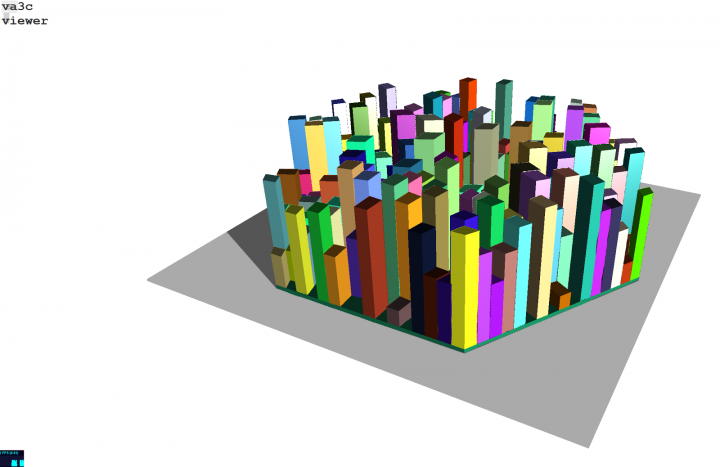

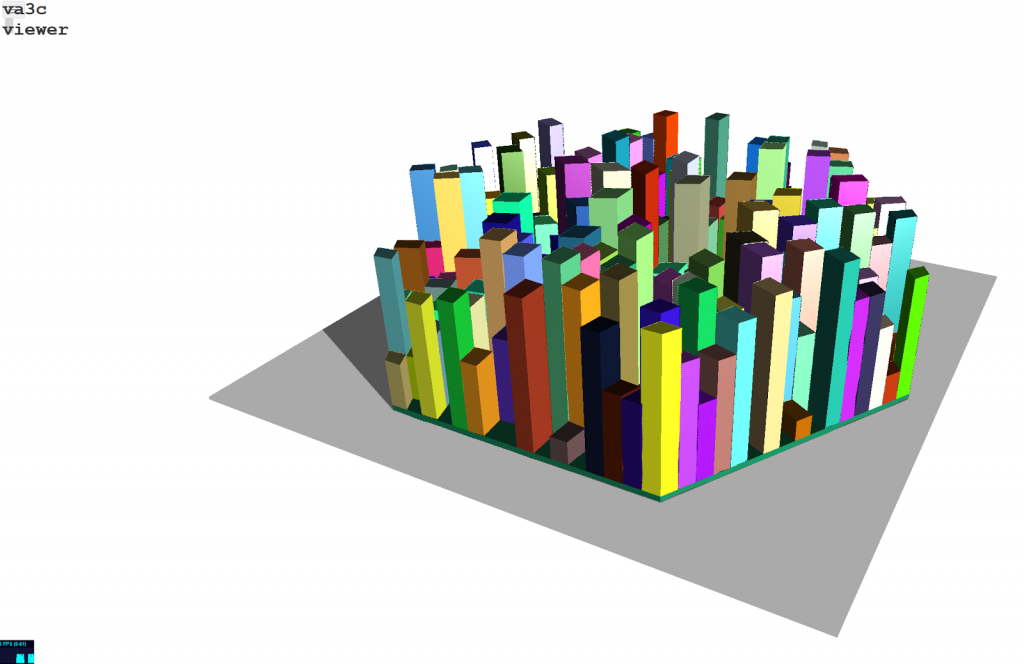

Finally, because we are using our custom built schema and validation tools, we can easily create visuals like this:

Modified versions of the Dynamo graphs can be found on the Bakery GitHub here: